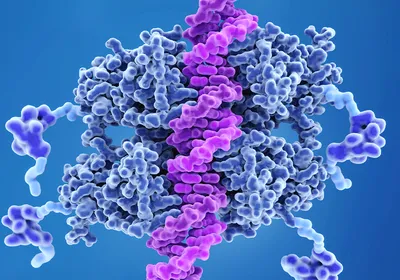

ABOVE: Precise genome editing tools transform how researchers investigate cancer mutations. Owen Gould

When scientists first began applying CRISPR for genome editing in human cells, it opened the floodgates for studying disease genetics. In those early days of editing with CRISPR-Cas9, precision oncology researcher Francisco Sánchez-Rivera was a graduate student investigating cancer genetics in Tyler Jacks’ laboratory at the Massachusetts Institute of Technology (MIT). In his graduate work, he was among the first to edit mouse genomes with CRISPR-Cas9 as he interrogated genetic drivers of lung cancer. These studies kickstarted Sánchez-Rivera’s interest in high-throughput genome editing, which fuels his current research at MIT.

In a recent Nature Biotechnology study, Sánchez-Rivera collaborated with biochemist David Liu at Harvard University to examine the most commonly mutated cancer gene, TP53, which encodes the tumor suppressor p53, often called the guardian of the genome. The team investigated thousands of p53 variants with a new high-throughput prime editing sensor library that quantitatively assesses how different mutations affect the function of the endogenous protein.

Sánchez-Rivera and his team found that certain mutations, particularly those affecting the oligomerization domain that allows p53 proteins to form functional tetramers, cause phenotypes that differ from those observed previously in overexpression model systems. The work underscores the importance of endogenous gene dosage in tumor genetics research and establishes a framework for interrogating genetic variants at scale, which could support future precision medicine discoveries.

What inspired you to investigate cancer genetics with prime editing?

The CRISPR-Cas9 system is not well suited for engineering specific cancer-related mutations like single nucleotide variants, single nucleotide polymorphisms, insertions and deletions, and different types of chromosome rearrangements. During my postdoctoral training with cancer biologist Scott Lowe at Memorial Sloan Kettering Cancer Center, I shifted my efforts to precision genome editing technologies such as cytosine base editing and adenine base editing, initially developed in David Liu’s laboratory by Alexis Komor (now a biochemist at University of California, San Diego) and Nicole Gaudelli (now an entrepreneur at Google Ventures), respectively. Those technologies allowed us to engineer specific single nucleotide alterations in cell genomes and conduct in vivo somatic genome editing in mice. That led to repurposing CRISPR base editing for high-throughput functional genomics, which set the stage for our prime editing work. Prime editing was developed by David Liu and Andrew Anzalone (now the director of Prime Medicine), and it is really what I consider the holy grail of precision genome editing because it allows us to engineer virtually any type of mutation.

How do you measure genome editing during high-throughput CRISPR screens?

With the original Cas9 nuclease, the guide RNA (gRNA) directs Cas9 to break the DNA, which typically induces loss-of-function, or inactivating, mutations. In that context, gRNA counts collected by next-generation sequencing provide an indirect measurement of how each guide affects cell fitness. For base editing and prime editing, relying on gRNA counts alone is not as accurate or quantitative, because the abundance of a guide does not reveal whether, or how precisely, it installed the intended edit. We developed sensor libraries to mitigate those challenges.
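For readers unfamiliar with how guide counts become a fitness readout, here is a minimal Python sketch of a common calculation: normalize each sample's counts to reads per million, add a pseudocount, and take the log2 ratio between a late and an early time point. The function name, the pseudocount of 1, and the toy guide names are assumptions for illustration, not details from the study; real screen pipelines also handle replicates and statistical testing.

```python
import math


def log2_fold_changes(counts_t0, counts_tend, pseudocount=1.0):
    """Hypothetical helper: per-guide log2 fold change between two time points.

    counts_t0 / counts_tend map guide name -> raw sequencing read count.
    Each sample is normalized to reads per million (RPM) so that differences
    in sequencing depth cancel out; the pseudocount avoids log(0).
    """
    total0 = sum(counts_t0.values())
    total1 = sum(counts_tend.values())
    lfc = {}
    for guide, raw0 in counts_t0.items():
        rpm0 = raw0 / total0 * 1e6 + pseudocount
        rpm1 = counts_tend.get(guide, 0) / total1 * 1e6 + pseudocount
        # Positive values: guide enriched over time (its edit helps cells grow);
        # negative values: guide depleted (its edit impairs fitness).
        lfc[guide] = math.log2(rpm1 / rpm0)
    return lfc


if __name__ == "__main__":
    early = {"g1": 100, "g2": 100, "g3": 100}
    late = {"g1": 300, "g2": 100, "g3": 20}
    for guide, value in log2_fold_changes(early, late).items():
        print(guide, round(value, 2))
```

Because every guide is scored only by its abundance, two guides with the same fold change could differ greatly in how efficiently or precisely they edited, which is the gap sensor libraries address.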

What are sensor libraries and how do they work?

Sensor libraries are collections of constructs, each encoding a gRNA together with a synthetic version of its target site. Even though the target site is synthetic, designed and cloned by us, it is built to mimic the endogenous site as closely as possible: we include the target site and its flanking DNA sequences, both based on the sequence of the endogenous locus.
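The sensor idea can be made concrete with a small sketch. The Python below copies a target window plus flanking sequence from an endogenous locus and checks that the guide's spacer sits directly upstream of an NGG PAM, the motif recognized by SpCas9-based prime editors. `SensorConstruct`, `build_target_site`, `has_ngg_protospacer`, and the flank length are hypothetical names and parameters; the check scans only the forward strand and ignores library cloning details such as barcodes and primer sites.

```python
from dataclasses import dataclass


@dataclass
class SensorConstruct:
    """Hypothetical record pairing a guide spacer with its synthetic target site."""
    name: str
    spacer: str       # guide sequence that matches the protospacer
    target_site: str  # synthetic copy of the target plus flanking sequence


def build_target_site(locus, start, end, flank):
    """Copy locus[start:end] (end exclusive) plus `flank` nt on each side,
    clipped to the ends of the locus, so the sensor mirrors the endogenous context."""
    lo = max(0, start - flank)
    hi = min(len(locus), end + flank)
    return locus[lo:hi]


def has_ngg_protospacer(construct):
    """True if the spacer occurs in the target site immediately followed by
    an NGG PAM (forward strand only; an SpCas9 PAM is assumed)."""
    site = construct.target_site.upper()
    spacer = construct.spacer.upper()
    idx = site.find(spacer)
    while idx != -1:
        pam = site[idx + len(spacer): idx + len(spacer) + 3]
        if len(pam) == 3 and pam[1:] == "GG":
            return True
        idx = site.find(spacer, idx + 1)
    return False


if __name__ == "__main__":
    spacer = "GACGTTACCGATGCATCGAT"  # toy 20-nt spacer
    locus = "A" * 10 + spacer + "TGG" + "C" * 10
    site = build_target_site(locus, start=10, end=33, flank=5)
    print(site, has_ngg_protospacer(SensorConstruct("toy", spacer, site)))
```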

We previously showed that we could get an indirect measurement of the base editing events that were happening endogenously by sequencing this synthetic target site in the construct during a high-throughput screen [2]. In this study, we took a page from that book and built a prime editing sensor library [1].

The prime editing guide RNA (pegRNA) is a more complex guide, but the concept is the same. When we introduce these libraries into cells that express the prime editing machinery, each pegRNA edits the synthetic target site in its own construct, and if the cells come from the same species as the target sequence, the pegRNA also edits the corresponding endogenous genomic site. That is powerful because it allows us to simultaneously quantify the editing efficiency and precision of each pegRNA within a library as it is happening in cells.
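Below is a minimal sketch of how sensor reads for one pegRNA might be turned into the two numbers described above. It assumes each read covers the whole synthetic target site and is compared by exact string match against the unedited reference and the intended edited sequence. Here, efficiency is the fraction of all reads carrying the intended edit, and precision is the fraction of edited reads that carry the intended edit. These definitions and the function name are illustrative assumptions, not the study's analysis pipeline, which would need read alignment and tolerance for sequencing errors.

```python
from collections import Counter


def score_sensor_reads(reads, reference, intended):
    """Hypothetical scorer for one pegRNA's sensor reads.

    Each read is classified as unedited (matches the reference), intended
    (matches the desired edit), or other (any different outcome, such as an
    unwanted indel). Returns (efficiency, precision, tally).
    """
    tally = Counter()
    for read in reads:
        if read == reference:
            tally["unedited"] += 1
        elif read == intended:
            tally["intended"] += 1
        else:
            tally["other"] += 1

    total = sum(tally.values())
    edited = tally["intended"] + tally["other"]
    # Efficiency: how often the intended edit was installed at all.
    efficiency = tally["intended"] / total if total else 0.0
    # Precision: of the sensors that changed, how many changed correctly.
    precision = tally["intended"] / edited if edited else 0.0
    return efficiency, precision, tally


if __name__ == "__main__":
    ref = "ACGTACGT"
    want = "ACGAACGT"  # toy T>A substitution
    reads = [ref] * 6 + [want] * 3 + ["ACGTACG"]  # one unintended deletion
    print(score_sensor_reads(reads, ref, want)[:2])
```

Scoring each pegRNA this way lets a screen separate guides that truly lack a phenotype from guides that simply failed to edit.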

Why did you focus on p53 when developing prime editing sensor libraries?

We used p53 as a prototype for several reasons. The gene that encodes p53 is the most commonly mutated cancer gene, and there is still a lot of controversy over what these mutations are doing [3].

Every human cell starts with two wild-type copies of TP53. Typically, the first event in a cell that acquires a p53 mutation is a point mutation in one copy, followed by a loss-of-heterozygosity event in which the second copy is mutated or lost. A number of studies have used exogenous cDNA-based mutagenesis or deep mutational scanning to overexpress different mutants that span the TP53 gene. These studies have been done in cells that express wild-type p53, such as the lung adenocarcinoma cells that we used in our study.

In genetic terms, the only property that this approach can measure is dominant-negative activity: the mutant is introduced alongside wild-type p53, where it can join wild-type tetramers and interfere with the wild-type p53 pool. But certain mutants may have properties beyond dominant-negative activity.
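Why the mutant-to-wild-type ratio matters for a dominant-negative readout can be illustrated with a textbook idealization, which is an assumption here rather than the study's model: p53 subunits assemble into tetramers at random from a pool in which a fraction f is mutant, and any tetramer containing a mutant subunit is nonfunctional. The number of mutant subunits per tetramer then follows a binomial distribution, and the chance that a tetramer is entirely wild type is (1 - f)^4. The function names are hypothetical.

```python
from math import comb


def tetramer_composition(mutant_fraction):
    """Probability that a tetramer holds k mutant subunits, for k = 0..4,
    assuming random assembly from a pool with the given mutant fraction."""
    f = mutant_fraction
    if not 0.0 <= f <= 1.0:
        raise ValueError("mutant_fraction must be between 0 and 1")
    return [comb(4, k) * f**k * (1 - f) ** (4 - k) for k in range(5)]


def wild_type_only_fraction(mutant_fraction):
    """Fraction of tetramers with no mutant subunit; under the idealized
    'poisoning' assumption these are the only functional tetramers."""
    return tetramer_composition(mutant_fraction)[0]


if __name__ == "__main__":
    # Equal expression of both alleles vs. a mutant overexpressed fourfold.
    for f in (0.5, 0.8):
        print(f, wild_type_only_fraction(f))
```

Under these assumptions, equal expression of mutant and wild-type alleles (f = 0.5) leaves 0.5^4 = 1/16, about 6%, of tetramers fully wild type, whereas a mutant overexpressed fourfold relative to wild type (f = 0.8) leaves only 0.2^4 = 0.16%. Real mutants differ in expression, stability, and oligomerization, which is one reason measuring them at endogenous dosage can be informative.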

We wanted to reach the point where we could rigorously test this model, and this work is just the beginning. With prime editing, we engineered endogenous mutations, maintaining the stoichiometry, gene dosage, and native way in which these mutations arise and progress. This method also allowed us to establish the technology very rigorously because we applied strong selection for cells with impaired p53 activity, so we quickly got an idea of whether the technology is robust and scalable.

What are the future directions?

This field moves quickly, but we sometimes suffer from it moving so quickly that we forget to do the most important experiments. Mechanistically interrogating these mutants is definitely part of our next steps. We are going back to mouse models to engineer different types of mutations in various tissues to understand what they are doing in vivo. In addition to modeling individual mutations, we look forward to performing multiplex prime editing in higher-throughput genetic experiments in mouse models, just as we did in cells.

This interview has been edited for length and clarity.

1. Gould SI, et al. High-throughput evaluation of genetic variants with prime editing sensor libraries. Nat Biotechnol. Published online March 12, 2024. doi:10.1038/s41587-024-02172-9

2. Sánchez-Rivera FJ, et al. Base editing sensor libraries for high-throughput engineering and functional analysis of cancer-associated single nucleotide variants. Nat Biotechnol. 2022;40(6):862-873.

3. Huang J. Current developments of targeting the p53 signaling pathway for cancer treatment. Pharmacol Ther. 2021;220:107720.